In an era dominated by data, the ability to extract meaningful insights from vast datasets is more critical than ever. As businesses, researchers, and data enthusiasts strive to harness the power of data analysis, the tools at their disposal can make all the difference. Among these tools, SciPy stands out as a cornerstone library in the Python ecosystem, offering a wealth of functions designed for scientific and technical computing. Whether you are working on statistical analysis, optimization, or signal processing, mastering key SciPy functions can significantly enhance your analytical capabilities.

In this blog post, we will delve into the top 10 SciPy functions that are essential for data analysis projects. We will explore how these functions can simplify complex tasks, improve efficiency, and enable more accurate results. From statistical tests to interpolation and numerical integration, you will gain a comprehensive understanding of the functionalities that SciPy provides. By the end of this post, you will be equipped with the knowledge to leverage these powerful tools in your data analysis endeavors, enabling you to extract insights that drive informed decision-making.

Step-by-Step Instructions

SciPy is a powerful library in Python that provides numerous functions for scientific computing and data analysis. In this guide, we will explore ten essential SciPy functions that can help you with data analysis projects. We will start with the basics and gradually move toward more complex functionalities. Let’s dive in!

Step 1: Install SciPy

Before we begin, ensure you have SciPy installed. You can install it using pip:

pip install scipy

Step 2: Import Necessary Libraries

To use SciPy, you need to import it along with NumPy, which is often used in conjunction with SciPy:

import numpy as np

from scipy import stats

Step 3: scipy.stats.describe

The describe function returns summary statistics for a dataset: the number of observations, the minimum and maximum, the mean, the variance, the skewness, and the kurtosis.

Example:

data = np.random.normal(loc=0, scale=1, size=1000)

summary = stats.describe(data)

print(summary)
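The printed result is a named tuple, so individual statistics can be read by name. The sketch below assumes a seeded random generator (the seed value and the variable names are our own choices, used only to make the run repeatable):

```python
import numpy as np
from scipy import stats

# Seeded generator so repeated runs give the same sample (seed is arbitrary).
rng = np.random.default_rng(0)
sample = rng.normal(loc=0, scale=1, size=1000)

summary = stats.describe(sample)

# Read individual fields of the result by name.
print(f"n = {summary.nobs}")
print(f"min, max = {summary.minmax}")
print(f"mean = {summary.mean:.3f}")
print(f"variance = {summary.variance:.3f}")  # unbiased estimate (ddof=1) by default
print(f"skewness = {summary.skewness:.3f}")
print(f"kurtosis = {summary.kurtosis:.3f}")  # Fisher definition: 0 for a normal distribution
```

For a standard normal sample of this size, the mean, skewness, and kurtosis should all land close to 0 and the variance close to 1.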

Step 4: scipy.stats.ttest_ind

The ttest_ind function performs an independent two-sample t-test to compare the means of two datasets. By default it assumes that the two populations have equal variances (equal_var=True).

Example:

data1 = np.random.normal(loc=0, scale=1, size=100)

data2 = np.random.normal(loc=0.5, scale=1, size=100)

t_stat, p_value = stats.ttest_ind(data1, data2)

print(f"T-statistic: {t_stat}, P-value: {p_value}")
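When the two groups may have different spreads or sizes, ttest_ind can run Welch's t-test instead by passing equal_var=False. The following is a minimal sketch with made-up group sizes and scales, chosen only to make the difference visible:

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)  # arbitrary seed for repeatability
group_a = rng.normal(loc=0.0, scale=1.0, size=100)
group_b = rng.normal(loc=0.5, scale=3.0, size=30)  # smaller group, larger spread

# Default behaviour: pooled-variance (Student) t-test.
t_pooled, p_pooled = stats.ttest_ind(group_a, group_b)

# equal_var=False selects Welch's t-test, which does not pool the variances.
t_welch, p_welch = stats.ttest_ind(group_a, group_b, equal_var=False)

print(f"Pooled: t = {t_pooled:.3f}, p = {p_pooled:.4f}")
print(f"Welch:  t = {t_welch:.3f}, p = {p_welch:.4f}")
```

The two variants generally disagree when both the variances and the sample sizes differ, which is why it is worth checking that assumption before reading the p-value.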

Step 5: scipy.stats.pearsonr

The pearsonr function computes the Pearson correlation coefficient and the p-value for testing non-correlation between two datasets.

Example:

x = np.random.rand(100)

y = 2 * x + np.random.normal(0, 0.1, 100)

corr_coefficient, p_value = stats.pearsonr(x, y)

print(f"Pearson correlation coefficient: {corr_coefficient}, P-value: {p_value}")

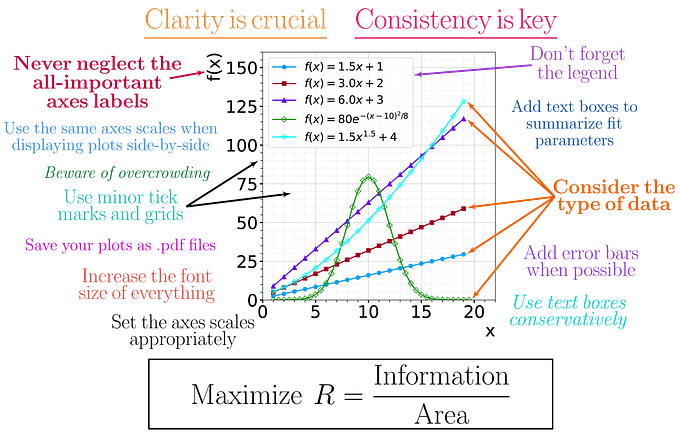

Step 6: scipy.optimize.curve_fit

The curve_fit function helps fit a curve to your data using non-linear least squares.

Example:

from scipy.optimize import curve_fit

def model(x, a, b):

    return a * x + b

x_data = np.array([1, 2, 3, 4])

y_data = np.array([1.1, 2.0, 3.1, 4.2])

params, _ = curve_fit(model, x_data, y_data)

print(f"Fitted parameters: {params}")
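The second value returned by curve_fit, discarded above, is the estimated covariance matrix of the parameters. As a rough sketch, the square roots of its diagonal give one-standard-deviation uncertainties for each fitted parameter (the name std_errors is ours):

```python
import numpy as np
from scipy.optimize import curve_fit

def model(x, a, b):
    # Straight line: slope a, intercept b.
    return a * x + b

x_data = np.array([1.0, 2.0, 3.0, 4.0])
y_data = np.array([1.1, 2.0, 3.1, 4.2])

# Keep the covariance matrix this time instead of discarding it.
params, covariance = curve_fit(model, x_data, y_data)

# One-standard-deviation uncertainty for each parameter.
std_errors = np.sqrt(np.diag(covariance))

for name, value, err in zip(["a", "b"], params, std_errors):
    print(f"{name} = {value:.3f} +/- {err:.3f}")
```

For this data the least-squares slope works out to about 1.04 with an intercept close to 0.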

Step 7: scipy.integrate.quad

The quad function computes the definite integral of a function of one variable over an interval and returns both the value and an estimate of the absolute error.

Example:

from scipy.integrate import quad

def f(x):

    return x**2

integral, error = quad(f, 0, 1)

print(f"Integral of x² from 0 to 1: {integral}")
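To see how much to trust the result, it helps to compare it with a known answer and to look at the error estimate. The sketch below checks the example against the exact value 1/3 and also integrates a Gaussian over an infinite range, which quad accepts through np.inf limits (the second integrand is our own choice of illustration):

```python
import numpy as np
from scipy.integrate import quad

# Finite interval: the exact answer is 1/3.
integral, error = quad(lambda x: x**2, 0, 1)
print(f"Numerical: {integral:.12f}, exact: {1/3:.12f}, error estimate: {error:.1e}")

# Infinite interval: the exact answer is sqrt(pi).
gauss, gauss_error = quad(lambda x: np.exp(-x**2), -np.inf, np.inf)
print(f"Numerical: {gauss:.12f}, exact: {np.sqrt(np.pi):.12f}")
```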

Step 8: scipy.signal.find_peaks

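The find_peaks function identifies local maxima in a one-dimensional array by comparing each value with its neighbors. It returns the peak indices together with a dictionary of peak properties.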

Example:

from scipy.signal import find_peaks

data = np.array([1, 3, 2, 5, 4, 6, 3, 7, 5])

peaks, _ = find_peaks(data)

print(f"Indices of peaks: {peaks}")
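On real data, every small bump counts as a peak, so it is common to filter them. A minimal sketch using the height argument on the same array (the 4.5 threshold is arbitrary):

```python
import numpy as np
from scipy.signal import find_peaks

data = np.array([1, 3, 2, 5, 4, 6, 3, 7, 5])

# Without conditions, every local maximum is reported.
all_peaks, _ = find_peaks(data)

# With a minimum height, smaller peaks are dropped and the heights
# of the remaining ones are returned in the properties dictionary.
tall_peaks, properties = find_peaks(data, height=4.5)

print(f"All peaks: {all_peaks}")                   # [1 3 5 7]
print(f"Peaks above 4.5: {tall_peaks}")            # [3 5 7]
print(f"Their heights: {properties['peak_heights']}")
```

Other conditions such as distance and prominence work the same way and can be combined.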

Step 9: scipy.linalg.inv

The inv function computes the inverse of a matrix.

Example:

from scipy.linalg import inv

matrix = np.array([[1, 2], [3, 4]])

inverse_matrix = inv(matrix)

print(f"Inverse of the matrix:\n{inverse_matrix}")
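A quick sanity check is to multiply the matrix by its inverse and confirm the result is close to the identity. The sketch below also shows scipy.linalg.solve, which is usually preferred over forming the inverse when the goal is to solve a linear system (the right-hand side b is a made-up example):

```python
import numpy as np
from scipy.linalg import inv, solve

matrix = np.array([[1.0, 2.0], [3.0, 4.0]])
inverse_matrix = inv(matrix)

# A matrix times its inverse should be (numerically) the identity.
identity_check = matrix @ inverse_matrix
print(np.allclose(identity_check, np.eye(2)))

# Solving matrix @ x = b directly avoids computing the inverse at all.
b = np.array([5.0, 6.0])
x_solve = solve(matrix, b)
x_inv = inverse_matrix @ b
print(f"solve: {x_solve}, via inverse: {x_inv}")
```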

Step 10: scipy.spatial.distance.euclidean

The euclidean function computes the Euclidean distance between two points in space.

Example:

from scipy.spatial import distance

point1 = np.array([1, 2])

point2 = np.array([4, 6])

dist = distance.euclidean(point1, point2)

print(f"Euclidean distance between points: {dist}")
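When there are more than two points, calling euclidean in a loop gets slow. As a sketch, scipy.spatial.distance.cdist computes all pairwise distances in one call (the third point is added here purely for illustration):

```python
import numpy as np
from scipy.spatial import distance

points = np.array([[1, 2], [4, 6], [1, 6]])

# Single pair, as in the example above.
dist = distance.euclidean(points[0], points[1])

# Full matrix of pairwise Euclidean distances.
pairwise = distance.cdist(points, points, metric="euclidean")

print(f"Distance between the first two points: {dist}")
print(pairwise)
```

Entry [i, j] of the matrix is the distance between point i and point j, so the diagonal is all zeros.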

In this guide, we introduced ten essential SciPy functions that are invaluable for data analysis. From basic statistical descriptions to advanced fitting and optimization techniques, these functions can help you efficiently analyze and interpret your data. Remember to explore the extensive SciPy documentation for more advanced functionalities and applications!

Real-World Applications

In the fast-paced world of data analysis, the ability to quickly extract insights from complex datasets can make or break a business. The SciPy library, with its robust suite of functions, empowers analysts and data scientists to tackle a variety of challenges across different industries. Let’s delve into some of the most significant real-world applications of the top 10 SciPy functions and explore how they shape decision-making processes in various sectors.

1. Statistical Analysis (scipy.stats)

In healthcare, statistical analysis is paramount for understanding patient outcomes. For instance, researchers might use scipy.stats to conduct hypothesis testing on clinical trial results, determining if a new medication is more effective than the standard treatment. A notable case is the study of a novel cancer drug, where researchers employed t-tests and ANOVA through SciPy to validate their findings, leading to a breakthrough in treatment protocols.
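As a rough illustration of the ANOVA step, scipy.stats.f_oneway compares the means of several groups at once. The scores below are simulated and purely hypothetical, not data from any real trial:

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)  # arbitrary seed

# Hypothetical outcome scores for three treatment arms.
placebo = rng.normal(loc=50, scale=5, size=40)
standard = rng.normal(loc=53, scale=5, size=40)
new_drug = rng.normal(loc=60, scale=5, size=40)

# One-way ANOVA: null hypothesis is that all group means are equal.
f_stat, p_value = stats.f_oneway(placebo, standard, new_drug)
print(f"F = {f_stat:.2f}, p = {p_value:.2e}")
```

A small p-value only says that at least one group mean differs; follow-up pairwise tests are needed to say which.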

2. Optimization (scipy.optimize)

Businesses often face the challenge of resource allocation. The logistics industry, for example, utilizes scipy.optimize to streamline delivery routes. A logistics company implemented linear programming techniques to minimize transportation costs, resulting in a 20% reduction in fuel expenses, which not only boosted profits but also lowered their carbon footprint.
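A toy version of such a cost-minimization problem can be written with scipy.optimize.linprog. All numbers here are invented for illustration: two routes with different per-unit costs and capacities, and a total of 10 units to deliver.

```python
from scipy.optimize import linprog

# Cost per unit shipped on route A and route B (hypothetical values).
cost_per_unit = [2.0, 3.0]

# Demand: units_a + units_b >= 10. linprog expects "<=" constraints,
# so the inequality is multiplied by -1.
A_ub = [[-1.0, -1.0]]
b_ub = [-10.0]

# Capacity limits: route A carries at most 6 units, route B at most 8.
bounds = [(0, 6), (0, 8)]

result = linprog(cost_per_unit, A_ub=A_ub, b_ub=b_ub, bounds=bounds)
print(f"Units per route: {result.x}, total cost: {result.fun}")
```

The solver fills the cheaper route to capacity (6 units) and sends the remaining 4 units on the other route, for a total cost of 24.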

3. Interpolation (scipy.interpolate)

In finance, historical price series often contain gaps. Analysts might use scipy.interpolate to fit smooth curves through historical stock data and estimate values between known observations. Note that interpolation fills gaps inside the observed range; it is not in itself a forecasting method. One investment firm successfully employed spline interpolation to estimate missing values in their datasets, leading to more informed trading decisions and improved portfolio performance.
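Filling a missing value with a spline might look like the sketch below, which uses scipy.interpolate.CubicSpline. The days and prices are invented for illustration, with day 3 missing:

```python
import numpy as np
from scipy.interpolate import CubicSpline

# Hypothetical closing prices; day 3 has no recorded value.
known_days = np.array([0, 1, 2, 4, 5])
known_prices = np.array([100.0, 101.5, 103.0, 102.0, 104.5])

# Build a cubic spline that passes through every known point.
spline = CubicSpline(known_days, known_prices)

# Evaluate the spline at the missing day.
estimate = float(spline(3.0))
print(f"Estimated price on day 3: {estimate:.2f}")
```

The spline reproduces the known prices exactly and gives a smooth estimate in between; evaluating it far outside the range of known_days is extrapolation and much less reliable.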

4. Fourier Transform (scipy.fft)

Signal processing is critical in telecommunications. With scipy.fft, engineers can analyze frequency components of signals to improve transmission quality. For example, a telecommunications company used the Fast Fourier Transform to filter out noise from communication signals, enhancing clarity and reliability for millions of users.
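A very simple form of FFT-based noise removal is to transform the signal, zero out the unwanted frequencies, and transform back. The sketch below assumes a 5 Hz tone contaminated by 120 Hz interference and an arbitrary 20 Hz cutoff; real filters are usually designed more carefully than this hard cutoff.

```python
import numpy as np
from scipy.fft import rfft, irfft, rfftfreq

fs = 500                      # sampling frequency in Hz
t = np.arange(fs) / fs        # one second of samples
clean = np.sin(2 * np.pi * 5 * t)
noisy = clean + 0.5 * np.sin(2 * np.pi * 120 * t)  # add 120 Hz interference

# Transform to the frequency domain (real-input FFT).
spectrum = rfft(noisy)
freqs = rfftfreq(len(noisy), d=1 / fs)

# Zero every component above the cutoff, then transform back.
spectrum[freqs > 20] = 0
filtered = irfft(spectrum, n=len(noisy))

print(f"Max deviation from the clean tone: {np.max(np.abs(filtered - clean)):.2e}")
```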

5. Integration (scipy.integrate)

Environmental scientists often need to calculate areas under curves to assess pollution levels over time. Using scipy.integrate, they can numerically integrate data points collected from air quality sensors. A recent study demonstrated how numerical integration helped track the effectiveness of regulations on reducing airborne particulates, leading to policy changes that improved public health.
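For sampled sensor readings (as opposed to a known function), scipy.integrate offers trapezoid and simpson. The sketch below uses invented hourly readings and assumes SciPy 1.6 or later, where trapezoid is available under that name:

```python
import numpy as np
from scipy.integrate import trapezoid, simpson

# Hypothetical particulate readings (micrograms per cubic meter), one per hour.
hours = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
concentration = np.array([10.0, 12.0, 15.0, 11.0, 9.0])

# Area under the curve = cumulative exposure over the four hours.
exposure_trapezoid = trapezoid(concentration, x=hours)
exposure_simpson = simpson(concentration, x=hours)

print(f"Trapezoid rule: {exposure_trapezoid}")
print(f"Simpson's rule: {exposure_simpson}")
```

The trapezoid rule joins the readings with straight lines, while Simpson's rule fits parabolas through them, so the two estimates differ slightly (47.5 and 47.0 for this data).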

6. Linear Algebra (scipy.linalg)

In machine learning, many algorithms rely on linear algebra for model training. The scipy.linalg module provides functions for matrix operations that are crucial in algorithms like Principal Component Analysis (PCA). A tech startup used these linear algebra tools to reduce dimensionality in their dataset, significantly speeding up their machine learning model training and enhancing predictive accuracy.
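PCA can be sketched in a few lines with scipy.linalg.svd: center the data, take the singular value decomposition, and project onto the leading right singular vectors. The random data and the choice of two components below are assumptions made for illustration:

```python
import numpy as np
from scipy.linalg import svd

rng = np.random.default_rng(0)          # arbitrary seed
X = rng.normal(size=(200, 5))           # 200 samples, 5 features (synthetic)

# PCA works on mean-centered data.
X_centered = X - X.mean(axis=0)

# Rows of Vt are the principal directions, ordered by singular value.
U, s, Vt = svd(X_centered, full_matrices=False)

# Project onto the first two principal components.
n_components = 2
X_reduced = X_centered @ Vt[:n_components].T

# Share of the total variance captured by each component.
explained_variance = s**2 / (len(X) - 1)
explained_ratio = explained_variance / explained_variance.sum()
print(f"Reduced shape: {X_reduced.shape}")
print(f"Explained variance ratio: {explained_ratio[:n_components]}")
```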

7. Clustering (scipy.cluster)

Market segmentation is vital for targeted marketing strategies. Retail companies often deploy clustering techniques from scipy.cluster to identify customer segments based on purchasing behavior. A notable case involved a major retailer using hierarchical clustering to tailor promotions, resulting in a 15% increase in sales during campaigns.
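Hierarchical clustering of customers could be sketched with scipy.cluster.hierarchy as follows. The two customer groups and their features are simulated, and Ward linkage is just one reasonable choice:

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster

rng = np.random.default_rng(0)  # arbitrary seed

# Hypothetical customers: columns are [visits per month, average basket value].
occasional = rng.normal(loc=[2, 20], scale=0.5, size=(20, 2))
frequent = rng.normal(loc=[10, 60], scale=0.5, size=(20, 2))
customers = np.vstack([occasional, frequent])

# Build the cluster tree, then cut it into two flat clusters.
tree = linkage(customers, method="ward")
labels = fcluster(tree, t=2, criterion="maxclust")

print(f"Cluster labels: {labels}")
```

In practice the features would be standardized first, since Euclidean distance is sensitive to the scale of each column.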

8. Image Processing (scipy.ndimage)

In the field of medical imaging, image processing is crucial for accurate diagnostics. Utilizing scipy.ndimage, radiologists enhance and analyze MRI scans, aiding in the detection of abnormalities. A hospital adopted these techniques to improve their diagnostic accuracy, ultimately leading to better patient outcomes and faster treatment plans.
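A toy version of "find the bright regions in a scan" can be built from two scipy.ndimage calls: gaussian_filter to smooth and label to count connected regions. The synthetic image and the 0.5 threshold below are stand-ins, not a real diagnostic pipeline:

```python
import numpy as np
from scipy import ndimage

# Synthetic 20x20 "scan" with two bright rectangular regions.
image = np.zeros((20, 20))
image[2:6, 2:6] = 1.0
image[12:17, 10:15] = 1.0

# Smooth to suppress pixel-level noise, then threshold.
smoothed = ndimage.gaussian_filter(image, sigma=1)
mask = smoothed > 0.5

# Label connected regions in the thresholded mask.
labeled, num_regions = ndimage.label(mask)
print(f"Number of bright regions: {num_regions}")
```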

9. Spatial Analysis (scipy.spatial)

Urban planners frequently rely on spatial analysis to design efficient city layouts. By employing scipy.spatial, they can analyze geographical data to optimize public transportation routes. A city council used these spatial tools to improve bus routes, resulting in a 30% increase in public transport usage and a reduction in traffic congestion.
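One building block for this kind of analysis is a nearest-neighbor lookup, for example finding the closest bus stop to each home. A minimal sketch with scipy.spatial.KDTree and invented coordinates:

```python
import numpy as np
from scipy.spatial import KDTree

# Hypothetical bus stop and home coordinates (in kilometers).
stops = np.array([[0.0, 0.0], [5.0, 5.0], [10.0, 0.0]])
homes = np.array([[4.0, 4.0], [9.0, 1.0]])

# Build the tree once, then query it for every home.
tree = KDTree(stops)
distances, nearest = tree.query(homes)

for home, stop_index, d in zip(homes, nearest, distances):
    print(f"Home {home} -> stop {stop_index} ({d:.2f} km)")
```

Building the tree once makes repeated queries fast, which matters when there are thousands of homes and stops.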

10. Signal Processing (scipy.signal)

In the field of audio engineering, scipy.signal is invaluable for filtering and analyzing sound. Audio engineers use these functions to enhance music tracks by removing unwanted noise. A music production company used these techniques to restore classic recordings, preserving important cultural artifacts while improving sound quality.
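Noise removal of this kind is often done with a low-pass filter. The sketch below designs a Butterworth filter with scipy.signal.butter and applies it with filtfilt; the 5 Hz tone, noise level, filter order, and 20 Hz cutoff are all arbitrary choices for illustration:

```python
import numpy as np
from scipy.signal import butter, filtfilt

fs = 500                          # sampling frequency in Hz
t = np.arange(2 * fs) / fs        # two seconds of samples
rng = np.random.default_rng(0)    # arbitrary seed

clean = np.sin(2 * np.pi * 5 * t)
noisy = clean + 0.5 * rng.normal(size=t.size)

# 4th-order low-pass Butterworth filter with a 20 Hz cutoff.
b, a = butter(4, 20, btype="low", fs=fs)

# filtfilt runs the filter forward and backward, so there is no phase shift.
filtered = filtfilt(b, a, noisy)

mse_before = np.mean((noisy - clean) ** 2)
mse_after = np.mean((filtered - clean) ** 2)
print(f"Mean squared error before: {mse_before:.4f}, after: {mse_after:.4f}")
```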

The versatility of SciPy and its functions is evident in their widespread application across various industries. By leveraging these powerful tools, professionals can derive meaningful insights, streamline operations, and foster innovation. As data continues to play a pivotal role in shaping our world, the importance of mastering such functions cannot be overstated. Embracing SciPy not only enhances analytical capabilities but also empowers organizations to make data-driven decisions that can lead to profound impacts in their fields.

Interactive Projects

Engaging with material through practical projects is one of the most effective ways to solidify your understanding of concepts and tools. By applying the functions you’ve learned in real-world scenarios, you not only reinforce your knowledge but also gain valuable experience that can enhance your data analysis skills. Here are some interactive project ideas that will help you dive deeper into the top 10 SciPy functions for data analysis.

1. Statistical Analysis of a Dataset

Objective: Use SciPy to perform statistical analysis on a dataset, such as the famous Iris dataset.

Steps:

- Load the Dataset: Use Pandas to load the Iris dataset.

import pandas as pd

iris = pd.read_csv('https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data', header=None)

- Explore Descriptive Statistics: Use scipy.stats.describe to get descriptive statistics.

from scipy import stats

stats.describe(iris.iloc[:, :-1])

- Conduct Hypothesis Testing: Perform a t-test to compare the means of two species.

species_setosa = iris[iris[4] == 'Iris-setosa'][0]

species_versicolor = iris[iris[4] == 'Iris-versicolor'][0]

t_stat, p_value = stats.ttest_ind(species_setosa, species_versicolor)

print(f"T-statistic: {t_stat}, P-value: {p_value}")

- Expected Outcome: Understand differences in measurements between species and interpret the results of the t-test.

2. Curve Fitting with SciPy

Objective: Fit a curve to a set of data points and visualize the results.

Steps:

- Generate Synthetic Data: Create a set of noisy data points.

import numpy as np

x = np.linspace(0, 10, 100)

y = 2 * np.sin(x) + np.random.normal(0, 0.5, x.size)

- Define a Model Function: Create a function that represents the model you want to fit.

def model_func(x, a, b):

    return a * np.sin(b * x)

- Use curve_fit: Fit the model to your data.

from scipy.optimize import curve_fit

params, covariance = curve_fit(model_func, x, y, p0=[2, 1])

- Visualize the Fit: Plot the original data and the fitted curve.

import matplotlib.pyplot as plt

plt.scatter(x, y, label='Data')

plt.plot(x, model_func(x, *params), color='red', label='Fitted Curve')

plt.legend()

plt.show()

- Expected Outcome: Gain insight into how well your model describes the data and understand the fitting parameters.

3. Image Processing with SciPy

Objective: Apply filters to an image using SciPy.

Steps:

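- Load an Image: Current SciPy releases no longer include an image reader (scipy.ndimage.imread was removed in SciPy 1.2), so load the file with Pillow and convert it to a NumPy array.

import numpy as np

from PIL import Image

from scipy import ndimage

import matplotlib.pyplot as plt

image = np.asarray(Image.open('path_to_image.jpg').convert('L')) # Load in grayscale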

- Apply a Gaussian Filter: Smooth the image using the Gaussian filter.

smoothed_image = ndimage.gaussian_filter(image, sigma=3)

- Display the Original and Smoothed Images:

plt.subplot(1, 2, 1)

plt.title('Original Image')

plt.imshow(image, cmap='gray')

plt.subplot(1, 2, 2)

plt.title('Smoothed Image')

plt.imshow(smoothed_image, cmap='gray')

plt.show()

- Expected Outcome: Observe the effects of the Gaussian filter on image quality and learn basic image processing techniques.

4. Optimization of a Function

Objective: Use SciPy to find the minimum of a mathematical function.

Steps:

- Define a Function: Create a simple quadratic function.

def objective_function(x):

    return (x - 3) ** 2 + 1

- Use minimize: Apply the minimize function from SciPy to find the minimum.

from scipy.optimize import minimize

result = minimize(objective_function, x0=0) # Starting guess

print(f"Minimum value: {result.fun} at x = {result.x}")

- Expected Outcome: Understand how optimization works and the importance of choosing initial guesses.
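The quadratic above has a single minimum, so any starting point leads to the same answer. To see why the initial guess matters, try a function with more than one local minimum. The function below is a made-up example, and the two starting points are chosen on either side of it:

```python
from scipy.optimize import minimize

def bumpy(x):
    # Hypothetical test function with two separate local minima.
    return x[0] ** 4 - 3 * x[0] ** 2 + x[0]

# Same function, two different starting guesses.
from_left = minimize(bumpy, x0=[-2.0])
from_right = minimize(bumpy, x0=[2.0])

print(f"Start at -2: x = {from_left.x[0]:.4f}, f = {from_left.fun:.4f}")
print(f"Start at +2: x = {from_right.x[0]:.4f}, f = {from_right.fun:.4f}")
```

Each run settles in the valley nearest to its starting point, and only one of them is the global minimum. Running the optimizer from several starting points is a simple way to guard against this.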

5. Fourier Transform for Signal Analysis

Objective: Analyze a signal using the Fast Fourier Transform (FFT).

Steps:

- Create a Signal: Generate a sine wave with noise.

fs = 500 # Sampling frequency

t = np.arange(0, 1, 1/fs)

freq = 5 # Frequency of the sine wave

signal = np.sin(2 * np.pi * freq * t) + 0.5 * np.random.normal(size=t.shape)

- Compute the FFT:

from scipy.fft import fft

fft_result = fft(signal)

- Visualize the Frequency Spectrum:

freq_axis = np.fft.fftfreq(len(signal), 1/fs)

plt.plot(freq_axis, np.abs(fft_result))

plt.title('Frequency Spectrum')

plt.xlabel('Frequency (Hz)')

plt.ylabel('Magnitude')

plt.show()

- Expected Outcome: Gain insights into the frequency components of the signal and how FFT can be used in signal processing.

These projects will not only help you understand the top 10 SciPy functions for data analysis but also provide you with hands-on experience that you can apply in your analysis work. Don’t hesitate to modify the projects, experiment with different datasets, or combine ideas to create something unique. Happy coding!

Supplementary Resources

As you explore the topic of ‘Top 10 SciPy Functions for Data Analysis’, it’s crucial to have access to quality resources that can enhance your understanding and skills. Below is a curated list of supplementary materials that will provide deeper insights and practical knowledge:

NumPy: the absolute basics for beginners: https://numpy.org/doc/stable/user/absolute_beginners.html

Pythonic Data Cleaning With pandas and NumPy (Real Python): https://realpython.com/python-data-cleaning-numpy-pandas/

Continuous learning is key to mastering any subject, and these resources are designed to support your journey. Dive into these materials to expand your horizons and apply new concepts to your work.
